What's new?

Last year, we saw a lot of interest in the use of LLMs for new use cases. This year, with more funding and interest in the space, we've finally started thinking about productionizing these models at scale and making sure that they're reliable, consistent and secure.

Let's start with a few definitions:

- Agent : This is an LLM which is provided with a few tools it can call. The agentic part of this system comes from its ability to make decisions based on some input. This is similar to the definition in Harrison Chase's article.

- Evaluations : A set of metrics that we can look at to understand where our current system falls short. An example could be measuring precision and recall.

- Synthetic Data Generation: Data generated by an LLM which is meant to mimic real data
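To make the precision and recall example concrete, here's a minimal sketch. The document IDs are made up for illustration, and `precision_recall` is just a name I picked.

```python
def precision_recall(predicted: set[str], relevant: set[str]) -> tuple[float, float]:
    """Return (precision, recall) for predicted items against the relevant ones."""
    true_positives = len(predicted & relevant)
    # Precision: how much of what we returned was actually relevant.
    precision = true_positives / len(predicted) if predicted else 0.0
    # Recall: how much of the relevant material we managed to return.
    recall = true_positives / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical example: the system returned 4 documents, 3 of them relevant,
# out of 6 relevant documents in total.
predicted = {"doc1", "doc2", "doc3", "doc9"}
relevant = {"doc1", "doc2", "doc3", "doc4", "doc5", "doc6"}
precision, recall = precision_recall(predicted, relevant)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

High precision with low recall, as here, would suggest the system is accurate but is missing a lot of what it should find.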

How do I see this coming together?

- As we deploy more LLMs in production, we'll start using more systems with agentic behaviour.

- This will be a complex process so we'll start developing more sophisticated evaluations to understand each component of this process.

- And ultimately, when we want to invest time into improving the capabilities of specific components of these systems, we'll use synthetic data to make sure our system is robust and reliable.

Let's see what each specific component looks like.

Agents

In my opinion, what separates an agent from a mere workflow is the orchestration. Workflows are simple processes (e.g. extract this data from this chunk of text) that have clearly defined inputs and outputs. Agents, on the other hand, have multiple choices to make at each round, with fuzzier goals such as "Help implement this new feature". This is big because we no longer need to laboriously code the logic for each of these decisions. We can instead just provide a model with some context and let it make decisions.
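Here's a rough sketch of that difference. Everything in it is a placeholder: `fake_model` stands in for a real LLM call, and the two tools are toy functions I made up. The point is only that the workflow has its steps hard-coded, while the agent loop asks the model what to do next on every round.

```python
from typing import Callable


# A workflow: fixed steps, clearly defined input and output.
def extract_emails(text: str) -> list[str]:
    return [word.strip(".,") for word in text.split() if "@" in word]


# Tools the agent may call. Both are toy stand-ins.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_code": lambda query: f"found 2 files matching '{query}'",
    "run_tests": lambda _: "all tests passed",
}


def fake_model(goal: str, history: list[str]) -> tuple[str, str]:
    """Stand-in for an LLM call: returns (tool_name, tool_input) or ("finish", answer)."""
    if not history:
        return "search_code", goal
    if len(history) == 1:
        return "run_tests", ""
    return "finish", f"Done after {len(history)} tool calls"


def run_agent(goal: str, max_rounds: int = 5) -> str:
    history: list[str] = []
    for _ in range(max_rounds):
        # The model, not our code, decides which step comes next.
        action, arg = fake_model(goal, history)
        if action == "finish":
            return arg
        history.append(TOOLS[action](arg))
    return "Stopped: round limit reached"


print(extract_emails("Contact ann@example.com or bob@example.com."))
print(run_agent("Help implement this new feature"))
```

The `max_rounds` cap is a design choice worth keeping in any real version, since an open-ended loop is exactly where reliability tends to break down.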

The end of BabyAGI

Libraries such as BabyAGI and LangChain enabled people to chain together complex iterations. But, these agents often lacked consistency and reliability with the open-ended ReAct methodology. Instead of viewing agents as autonomous intelligent processes, we'll be seeing a lot more of them deployed as extensions of existing workflows.

An example would be Ionic Commerce, which is helping build agents for e-commerce. The key takeaway from their talk was that they want to provide agents with more context on the item directly from the merchant and allow them to make purchases directly. In short, instead of training a vision LLM to click a button, we can just give the agent more context and an API to call to make a decision/purchase.
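As a sketch of what "more context and an API to call" could look like, assume the merchant supplies structured item details and exposes a purchase function. None of this is Ionic Commerce's actual API: `ProductContext`, `purchase` and `decide_and_buy` are hypothetical names, and the decision rule is a stand-in for whatever the model would choose.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProductContext:
    """Structured item details supplied by the merchant (hypothetical fields)."""
    sku: str
    name: str
    price_usd: float
    in_stock: bool


def purchase(sku: str, quantity: int) -> dict:
    """Hypothetical purchase endpoint; a real one would call the merchant's API."""
    return {"status": "ordered", "sku": sku, "quantity": quantity}


def decide_and_buy(products: list[ProductContext], budget_usd: float) -> Optional[dict]:
    """Stand-in for the model's decision: buy the cheapest in-stock item within budget."""
    candidates = [p for p in products if p.in_stock and p.price_usd <= budget_usd]
    if not candidates:
        return None
    choice = min(candidates, key=lambda p: p.price_usd)
    return purchase(choice.sku, quantity=1)


catalog = [
    ProductContext("sku-1", "Trail shoes", 120.0, True),
    ProductContext("sku-2", "Road shoes", 90.0, False),
    ProductContext("sku-3", "Sandals", 45.0, True),
]
print(decide_and_buy(catalog, budget_usd=100.0))
```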

Evaluations

Evaluations were a huge thing this year. Last year, everyone was excited about deploying these models into production, but this year we're seeing a lot more focus on evaluating these models and making them more reliable and trustworthy.

We're deploying them at a larger scale with more complex scaffolding, and it's tough to decide between options without evaluations to understand the trade-offs. But what's made it difficult to deploy has been the open-ended nature of LLM generations.

What makes Evaluations Difficult?

We can reduce a lot of the scaffolding around LLM applications to traditional machine learning metrics. The classic case is the new view of retrieval in RAG as just information retrieval, much like a recommendation system. This makes our life a lot easier.
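For instance, once retrieval is treated as an information retrieval problem, standard metrics such as recall@k and reciprocal rank apply directly. This is a minimal sketch with made-up chunk IDs, assuming we have labelled which chunks are relevant for each query.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant chunks that appear in the top k results."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant chunk, or 0.0 if none was retrieved."""
    for rank, chunk_id in enumerate(retrieved, start=1):
        if chunk_id in relevant:
            return 1.0 / rank
    return 0.0


# Hypothetical retrieval results for a single query.
retrieved = ["chunk_7", "chunk_2", "chunk_9", "chunk_4"]
relevant = {"chunk_2", "chunk_4"}
print(recall_at_k(retrieved, relevant, k=3))  # 1 of the 2 relevant chunks is in the top 3
print(reciprocal_rank(retrieved, relevant))   # first relevant chunk sits at rank 2
```

Averaging these over a set of labelled queries gives numbers you can compare across retrievers without involving an LLM at all.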

But, LLMs can generate any form of open-ended text. This means that it's a lot more difficult to evaluate the quality of the output on dimensions such as factuality and consistency.

Evaluations in Production

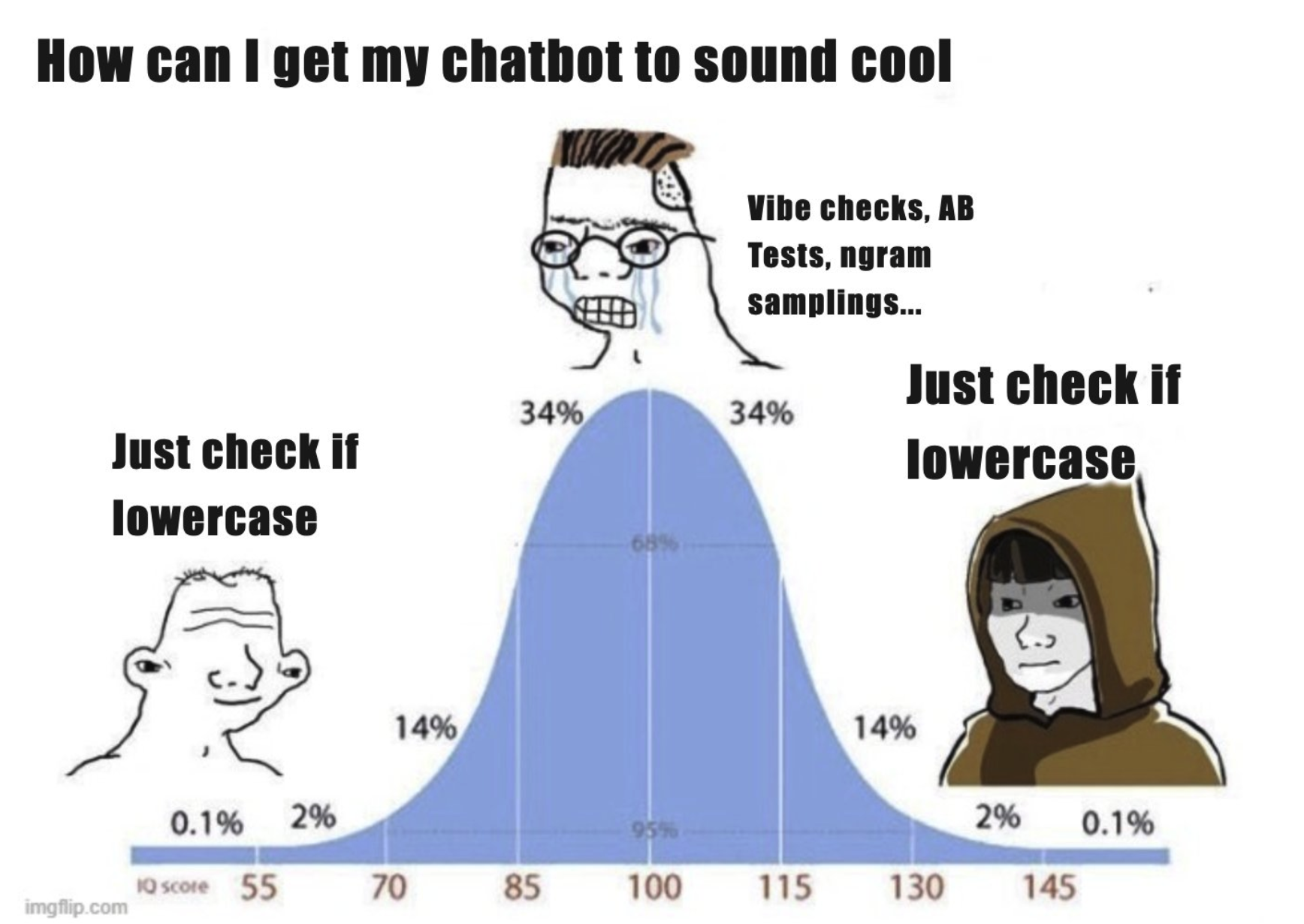

What I found interesting was Discord's approach to evaluations when they built a chatbot for 20M users. At that scale, even rare edge cases become highly probable. Therefore, to keep their evaluations robust, they focused on evaluations that were easy to run locally and quick to implement. This was released as their new open-source library PromptFoo. A great example of this was how they checked if the chatbot was being used casually by seeing if the message was all lowercase.

I also particularly liked the approach taken by Eugene Yan and Shreya Shankar, which focused on breaking down complex evaluations into simple yes/no questions. This made it easier to train models and understand results.
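A check like the lowercase one is cheap enough to run on every message. Here's a sketch of the idea; it is not Discord's actual implementation, and `looks_casual` and `casual_rate` are names I made up.

```python
def looks_casual(message: str) -> bool:
    """Heuristic: a message with letters but no uppercase ones is treated as casual."""
    # str.islower() is True only if there is at least one cased character
    # and every cased character is lowercase.
    return message.islower()


def casual_rate(messages: list[str]) -> float:
    """Fraction of messages flagged as casual; 0.0 for an empty list."""
    if not messages:
        return 0.0
    return sum(looks_casual(m) for m in messages) / len(messages)


messages = [
    "hey can u help me with this",
    "Could you summarise this thread?",
    "lol ok",
    "12345",
]
print(casual_rate(messages))
```

It's obviously a crude signal, but it runs locally in microseconds and needs no model call, which is the property that matters here.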
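One way to picture the yes/no breakdown: instead of a single fuzzy "quality" score, each response is run through several binary checks, and we track a pass rate per check. The checks below are simple heuristics I invented for illustration; in practice each one could just as well be a question put to an LLM judge.

```python
from typing import Callable

# Each check answers one yes/no question about a response (hypothetical checks).
CHECKS: dict[str, Callable[[str], bool]] = {
    "is_non_empty": lambda r: bool(r.strip()),
    "under_50_words": lambda r: len(r.split()) <= 50,
    "avoids_apology": lambda r: "sorry" not in r.lower(),
}


def evaluate(response: str) -> dict[str, bool]:
    """Run every yes/no check against a single response."""
    return {name: check(response) for name, check in CHECKS.items()}


def pass_rates(responses: list[str]) -> dict[str, float]:
    """Share of responses passing each check; all zeros for an empty list."""
    if not responses:
        return {name: 0.0 for name in CHECKS}
    results = [evaluate(r) for r in responses]
    return {name: sum(r[name] for r in results) / len(results) for name in CHECKS}


responses = [
    "The refund was issued on Monday.",
    "Sorry, I can't help with that.",
    "",
]
for name, rate in pass_rates(responses).items():
    print(f"{name}: {rate:.2f}")
```

Because every check is binary, a drop in one pass rate points at a specific failure mode rather than a vague decline in quality.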

Ultimately, these new ways of evaluating AI will help create better, safer AI systems that work well in the real world.

Synthetic Data

Generating synthetic data is easy; generating good synthetic data is hard.

Learnings from the Conference

One of my favourite talks at the conference was by Vikhyatk, who talked about the Moondream model that he trained. Moondream is a small vision model with ~1.5 billion parameters. That's significantly smaller than most models these days. They trained the model with a significant amount of synthetic data and shared a lot of their insights on generating the training data. If I had to summarize it, it would be: generate diverse outputs and include absurd questions. This helps to create a richer and more diverse dataset for training. They did so by injecting unique elements into each prompt (in their case, the image alt text for each image) using some form of permutation. They also generated some absurd questions to help the model learn when to refuse certain questions.

However, when using Mixtral to generate absurd questions, they found that it tended to always generate questions about aliens and dinosaurs. So, make sure to always look at the questions you're generating!
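I don't know the exact pipeline they used, so treat the following as my own sketch of the general idea: combine each image's alt text with a set of question styles to get varied prompts, then add one "absurd" prompt per image whose expected answer is a refusal. The prompt wording, `QUESTION_STYLES` and `build_prompts` are all assumptions on my part.

```python
import itertools

# Hypothetical question styles used to vary the prompts.
QUESTION_STYLES = [
    "a counting question",
    "a question about colours",
    "a question about spatial layout",
]


def build_prompts(alt_texts: list[str], styles: list[str]) -> list[str]:
    """Build generation prompts by pairing every alt text with every style."""
    prompts = []
    for alt_text, style in itertools.product(alt_texts, styles):
        prompts.append(
            f"Image description: {alt_text}\n"
            f"Write {style} that can be answered from this image."
        )
    # One absurd prompt per image: the question cannot be answered from the
    # image, so the paired training answer should be a refusal.
    for alt_text in alt_texts:
        prompts.append(
            f"Image description: {alt_text}\n"
            "Write a question about something that is NOT in this image. "
            "The correct answer should be a polite refusal."
        )
    return prompts


alt_texts = ["A dog sleeping on a red sofa", "Two cyclists on a mountain road"]
prompts = build_prompts(alt_texts, QUESTION_STYLES)
print(len(prompts))
print(prompts[0])
```

Because the alt text is unique per image, every prompt is distinct, which pushes the generating model away from producing the same question over and over.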
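Reading the samples yourself is the real fix, but a quick word count can flag this kind of collapse early. This is a rough sketch with invented example questions; the stopword list and the length cutoff are arbitrary choices of mine.

```python
import re
from collections import Counter

# A tiny, hand-picked stopword list (an assumption; extend as needed).
STOPWORDS = {"what", "where", "which", "when", "this", "that", "with", "from", "does", "have"}


def keyword_counts(questions: list[str], top_n: int = 5) -> list[tuple[str, int]]:
    """Return the most frequent content words across the generated questions."""
    words = []
    for question in questions:
        for word in re.findall(r"[a-z]+", question.lower()):
            # Skip very short words and stopwords so topics stand out.
            if len(word) > 3 and word not in STOPWORDS:
                words.append(word)
    return Counter(words).most_common(top_n)


# Hypothetical generated questions.
questions = [
    "Why is the alien riding the bicycle?",
    "What is the alien eating for lunch?",
    "Where did the dinosaur park the car?",
    "Which alien painted the fence?",
]
print(keyword_counts(questions, top_n=3))
```

If one or two words dominate the counts, the generator has probably latched onto a narrow set of topics and the prompts need more variety.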

These strategies contribute to creating more robust and versatile models that can handle a wide range of inputs and scenarios.

Structured Extraction

I believe that Structured Extraction is the key to integrating all of these components together. Structured Extraction simply means that we validate the outputs we get out of an LLM.

This could be as simple as getting a Python object with the right types and properties. But, this is a huge innovation!

- It makes multi-agent workloads possible : With validated outputs, it becomes easier for agents to communicate with each other, not unlike traditional microservices of the past

- It makes evaluation easier : With outputs that are in a consistent format and have well defined schemas, it's easier to log the results of different agents. This makes evaluation significantly easier

- Generating Synthetic Data is better : With well defined schemas, it's easier to generate synthetic data. This is especially important when we want to test the robustness of our systems.

Imagine parsing the raw chat completion from every model by hand. That's simply not tenable or scalable anymore.
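Here's a minimal sketch of what validating a model's output into a typed Python object can look like. To keep it self-contained I've used only the standard library; validation libraries such as Pydantic do the same job with far less code. The `User` schema and `parse_user` are hypothetical examples.

```python
import json
from dataclasses import dataclass


@dataclass
class User:
    name: str
    age: int

    def __post_init__(self) -> None:
        if not isinstance(self.name, str) or not self.name:
            raise ValueError("name must be a non-empty string")
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(self.age, int) or isinstance(self.age, bool) or self.age < 0:
            raise ValueError("age must be a non-negative integer")


def parse_user(raw_completion: str) -> User:
    """Parse a model's JSON output into a validated User, or raise ValueError."""
    try:
        data = json.loads(raw_completion)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if set(data) != {"name", "age"}:
        raise ValueError(f"expected exactly the keys 'name' and 'age', got {sorted(data)}")
    return User(**data)


print(parse_user('{"name": "Ada", "age": 36}'))

try:
    parse_user('{"name": "Ada", "age": "thirty-six"}')
except ValueError as err:
    print(f"rejected: {err}")
```

Everything downstream (another agent, a logger, an evaluation script) can then rely on getting a `User` or a clear error, rather than a string it has to guess at.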

Conclusion

I hope you found this article useful! I'd love to discuss and hear your thoughts on this topic.